Matthew

9/23/2024

In today’s digital world, speech chatbots are becoming increasingly popular. They let companies interact with their customers in a new way by understanding and responding to human speech. But how exactly does a speech chatbot work? Which technological processes run under the hood, and why are latency and precision particularly challenging? In this blog post, we take a detailed look at how a speech chatbot works and explain the challenges involved.

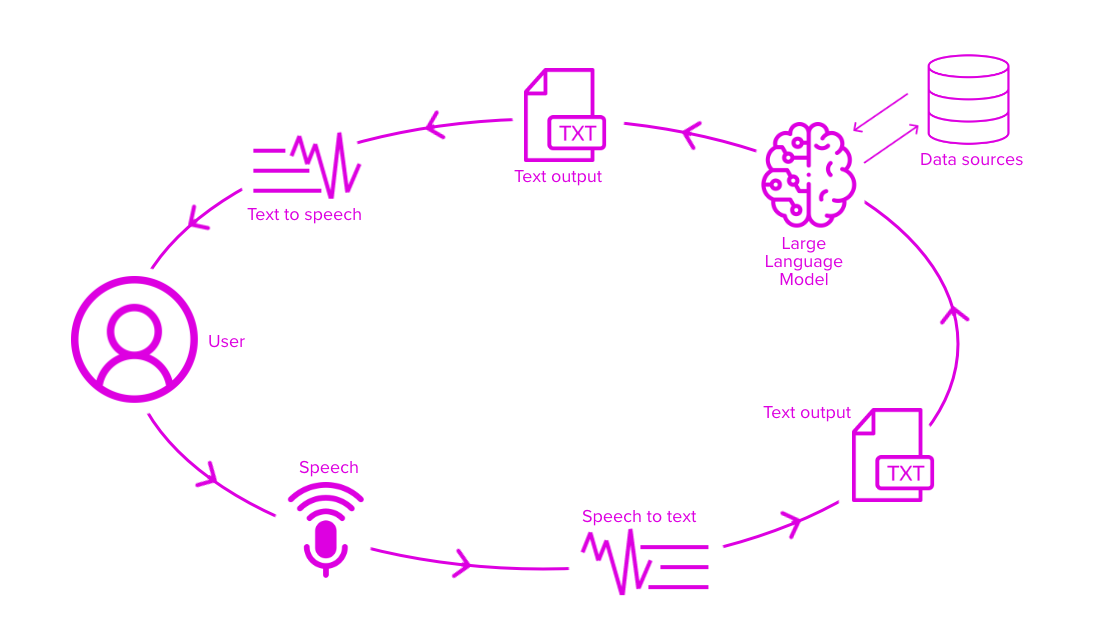

A speech chatbot is an application of artificial intelligence (AI) that enables users to interact with a system through spoken language. This technology combines speech recognition (Speech-to-Text, S2T), natural language processing (NLP), and text-to-speech conversion (Text-to-Speech, T2S) to understand, process, and respond to spoken queries.

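The main components of a speech chatbot include:

- Speech recognition (Speech-to-Text, S2T)
- Natural language processing (NLP)
- Text-to-speech conversion (Text-to-Speech, T2S)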

To better understand how a speech chatbot works, it’s helpful to break down the individual steps involved in a typical interaction.
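As a rough illustration, one interaction can be modeled as four functions called in sequence: speech to text, understanding, response generation, and text to speech. The Python sketch below is a minimal outline under stated assumptions: the class `SpeechChatbot` and its stage names are hypothetical, and the lambdas are toy stand-ins for real speech recognition, language understanding, response generation, and speech synthesis components.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures; a real system would call an S2T model,
# an NLP component, a response generator, and a T2S engine here.
SpeechToText = Callable[[bytes], str]
Understand = Callable[[str], dict]
Respond = Callable[[dict], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class SpeechChatbot:
    s2t: SpeechToText
    understand: Understand
    respond: Respond
    t2s: TextToSpeech

    def handle(self, audio: bytes) -> bytes:
        """Run one spoken turn through all four steps in order."""
        text = self.s2t(audio)          # 1. speech -> text
        query = self.understand(text)   # 2. text -> intent and entities
        answer = self.respond(query)    # 3. intent -> response text
        return self.t2s(answer)         # 4. response text -> speech


# Toy stand-ins (hypothetical) so the sketch runs without any speech models:
# "audio" is just UTF-8 text here.
bot = SpeechChatbot(
    s2t=lambda audio: audio.decode("utf-8"),
    understand=lambda text: {"intent": "greeting" if "hello" in text.lower() else "unknown"},
    respond=lambda q: "Hi, how can I help?" if q["intent"] == "greeting" else "Sorry, could you rephrase?",
    t2s=lambda text: text.encode("utf-8"),
)

print(bot.handle(b"Hello there").decode("utf-8"))
```

Because each step waits for the previous one, any delay in one stage directly delays the spoken answer.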

The first step in interacting with a speech chatbot is converting the user’s spoken language into text. This process involves advanced machine learning models and algorithms for speech recognition.

How long this takes depends on the complexity of the request, the audio quality, and network speed. This brings up the first challenge: latency. The faster the chatbot converts speech into text, the smoother and more natural the interaction feels for the user.
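Because every step contributes to latency, it helps to measure each one separately. A minimal sketch, assuming a hypothetical `fake_s2t` function that simply sleeps to imitate a speech recognition model; the `timed` wrapper uses Python’s `time.perf_counter` to record how long each call takes, in milliseconds.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def timed(stage: Callable[[T], R], log: dict[str, float], name: str) -> Callable[[T], R]:
    """Wrap a pipeline stage so each call records its wall-clock duration in ms."""
    def wrapper(arg: T) -> R:
        start = time.perf_counter()
        try:
            return stage(arg)
        finally:
            log[name] = (time.perf_counter() - start) * 1000.0
    return wrapper


def fake_s2t(audio: bytes) -> str:
    # Hypothetical stand-in for a speech recognition model; sleeps to mimic work.
    time.sleep(0.05)
    return audio.decode("utf-8")


latencies: dict[str, float] = {}
s2t = timed(fake_s2t, latencies, "s2t")
text = s2t(b"What are your opening hours?")
print(text, f"{latencies['s2t']:.0f} ms")
```

The same wrapper can be applied to every stage, which makes it easy to see where the time actually goes.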

Once the spoken language is converted to text, the chatbot uses NLP algorithms to understand the meaning of the query. This step involves several subprocesses:

This NLP step is also time-intensive and can lead to delays. A key challenge here is precision: the chatbot must accurately identify the user’s intent and extract the relevant information to generate the correct response.
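To make intent recognition and information extraction more concrete, here is a deliberately simple rule-based sketch. It is not how production NLP works (such systems typically rely on trained models); the intents, the keyword lists, and the assumption that order numbers have six digits are all hypothetical. It only shows the shape of the result: an intent plus the extracted entities.

```python
import re

# Hypothetical intents and keywords; real systems usually use trained models,
# but the input (text) and output (intent + entities) look similar.
INTENT_KEYWORDS = {
    "opening_hours": {"open", "opening", "hours", "close"},
    "order_status": {"order", "package", "delivery", "shipped"},
}

ORDER_ID = re.compile(r"\b\d{6}\b")  # assumption: order numbers are 6 digits


def analyze(text: str) -> dict:
    """Return the best-matching intent and any extracted entities."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    # Score each intent by how many of its keywords appear in the query.
    scores = {intent: len(tokens & words) for intent, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    intent = best if scores[best] > 0 else "unknown"
    entities = {}
    match = ORDER_ID.search(text)
    if match:
        entities["order_id"] = match.group()
    return {"intent": intent, "entities": entities}


print(analyze("Where is my order 482913?"))
```

Here `analyze("Where is my order 482913?")` returns `{'intent': 'order_status', 'entities': {'order_id': '482913'}}`. A keyword approach like this is fast but brittle, which illustrates why precision is hard.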

After analyzing the query, the chatbot must generate an appropriate response. This step can be carried out in different ways:

The challenge here lies in ensuring that the information provided is both accurate and relevant. Generative AI solutions offer significant advantages but also carry the risk of producing inaccurate or irrelevant responses.
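One way to keep answers accurate is to prefer responses filled from known data and to hand over to a generative step only when no template applies. The sketch below assumes hypothetical `KNOWLEDGE` and `ORDERS` tables with placeholder values and an optional `generate` callable standing in for a generative model; it is one possible design, not a prescribed one.

```python
from typing import Callable, Optional

# Hypothetical company data the chatbot may answer from (placeholder values).
KNOWLEDGE = {"opening_hours": "Mon-Fri, 9:00-17:00"}
ORDERS = {"482913": "shipped"}

# One template per intent; a template returns None if the data cannot answer.
TEMPLATES: dict[str, Callable[[dict], Optional[str]]] = {
    "opening_hours": lambda e: f"We are open {KNOWLEDGE['opening_hours']}.",
    "order_status": lambda e: (
        f"Order {e['order_id']} is {ORDERS[e['order_id']]}."
        if e.get("order_id") in ORDERS
        else None
    ),
}

FALLBACK = "I'm not sure I understood. Could you rephrase your question?"


def respond(query: dict, generate: Optional[Callable[[dict], str]] = None) -> str:
    """Prefer a template filled from known data; otherwise use a generator or a safe fallback."""
    template = TEMPLATES.get(query["intent"])
    answer = template(query["entities"]) if template else None
    if answer is not None:
        return answer
    if generate is not None:
        # Hypothetical generative step; its output would still need checking.
        return generate(query)
    return FALLBACK
```

Returning a clarification prompt instead of guessing trades a slightly longer conversation for fewer wrong answers.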

Once the response is generated, it is converted back into spoken language through the T2S component:

Latency arises here as well, because converting text to speech requires computing power. Reducing these delays is crucial for a good user experience.
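One possible way to hide part of the T2S delay is to synthesize the reply sentence by sentence, so audio for the first sentence can start playing while the rest is still being processed. A minimal sketch, assuming a hypothetical `fake_t2s` stand-in for a real synthesis engine and a naive punctuation-based sentence splitter.

```python
import re
from typing import Callable, Iterator


def synthesize_incrementally(text: str, t2s: Callable[[str], bytes]) -> Iterator[bytes]:
    """Yield audio sentence by sentence so playback can begin before the whole reply is synthesized."""
    # Naive splitter (assumption): break after '.', '!' or '?' followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        if sentence:
            yield t2s(sentence)


def fake_t2s(sentence: str) -> bytes:
    # Hypothetical stand-in for a T2S engine: returns the text as bytes.
    return sentence.encode("utf-8")


chunks = list(synthesize_incrementally("Your order has shipped. It should arrive on Friday!", fake_t2s))
print(len(chunks))
```

Total synthesis work stays the same, but the user hears the first words sooner.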

One of the biggest challenges in developing a speech chatbot is latency: the delay between the user’s input and the system’s output. Several factors contribute to latency:

Because these delays accumulate across the pipeline, each one affects the chatbot’s overall response time. To minimize latency, developers must ensure that the underlying infrastructure is optimized and that the models and algorithms used are efficient.
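Since the stages run one after another, the end-to-end latency is roughly the sum of the per-stage latencies. The helper below totals measured stage times, names the largest contributor, and checks the total against a target. The stage names, the 1,000 ms budget, and the numbers in the usage example are hypothetical placeholders, not benchmarks.

```python
def latency_report(stage_ms: dict[str, float], budget_ms: float) -> dict:
    """Sum per-stage latencies and compare the total with a target budget."""
    total = sum(stage_ms.values())
    slowest = max(stage_ms, key=stage_ms.get)  # stage with the largest share
    return {
        "total_ms": total,
        "slowest_stage": slowest,
        "within_budget": total <= budget_ms,
    }


# Hypothetical values for illustration only, not measurements.
report = latency_report(
    {"network": 40, "s2t": 300, "nlp": 150, "response": 400, "t2s": 200},
    budget_ms=1000,
)
print(report)
```

With these placeholder numbers the total is 1,090 ms, so the budget is missed and response generation is the first place to look.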

There are various strategies to reduce latency:

In addition to latency, precision is a key concern when developing speech chatbots. The chatbot must accurately understand the user’s intent and provide a relevant, precise answer. This is often difficult, as natural language can be complex and ambiguous.
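A common safeguard against ambiguity is to act on an intent only when the classifier is confident enough, and to ask a clarifying question otherwise. The sketch below assumes a hypothetical classifier that returns a score per intent; the thresholds `min_confidence` and `min_margin` are illustrative values that would need tuning on real data.

```python
def choose_intent(scores: dict[str, float], min_confidence: float = 0.6, min_margin: float = 0.2) -> str:
    """Return the top intent if it is confident and clearly ahead, else 'clarify'."""
    if not scores:
        return "clarify"
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    top_intent, top_score = ranked[0]
    second_score = ranked[1][1] if len(ranked) > 1 else 0.0
    # Require both an absolute confidence and a clear lead over the runner-up.
    if top_score >= min_confidence and top_score - second_score >= min_margin:
        return top_intent
    return "clarify"  # e.g. ask: "Do you want to cancel or change your order?"


print(choose_intent({"cancel_order": 0.48, "change_order": 0.44, "order_status": 0.08}))
```

Raising the thresholds improves precision but triggers more clarifying turns, which in turn lengthens the conversation.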

Developing an effective speech chatbot requires a careful balance between latency and precision. While a fast response time is crucial for improving user experience, it is equally important to ensure that the information provided is accurate and relevant. Therefore, companies must invest in modern technologies and infrastructure to tackle these two challenges.

In summary, implementing a speech chatbot is no simple task. It requires thoughtful planning, the use of advanced AI models, and continuous optimization to ensure that the chatbot performs both quickly and accurately. Only then can it meet user expectations and provide real value.

Businesses that invest in developing and optimizing speech chatbots can reap significant benefits: better customer communication, more efficient workflows, and new business models. The ongoing advancement of this technology will be critical in overcoming the challenges of latency and precision, particularly through solutions like izzNexus, which allows companies to integrate AI with their own data sources. izzNexus offers full integration of speech-to-text and text-to-speech technologies and can be used flexibly as a feature. This functionality is especially valuable for applications requiring accessible interactions, voice control, or automation of voice-based tasks.

The integration of AI solutions capable of real-time speech processing and leveraging vast data sets will become increasingly important in the coming years. Companies should therefore familiarize themselves with these technologies early on to fully capitalize on their potential.